About Me

I create smart machines that free people from boring tasks and let them focus on what they do best. For the past ten years, I have built machines that can sense, think, and act in places like factories, farms, and homes. To create them, I combine my expertise in machine vision, machine learning, Internet-of-Things, embedded systems, and mechatronics.

Professional Profile

- Commercial product development, from prototyping to mass production.

- Optimization of computer vision and machine learning algorithms on custom hardware.

- Internet-of-Things devices, and edge and cloud platforms for machine learning applications.

- Implementation of electronic systems, from chip design to the firmware/software stack.

- Integration of electronic systems into mechatronic and robotic systems.

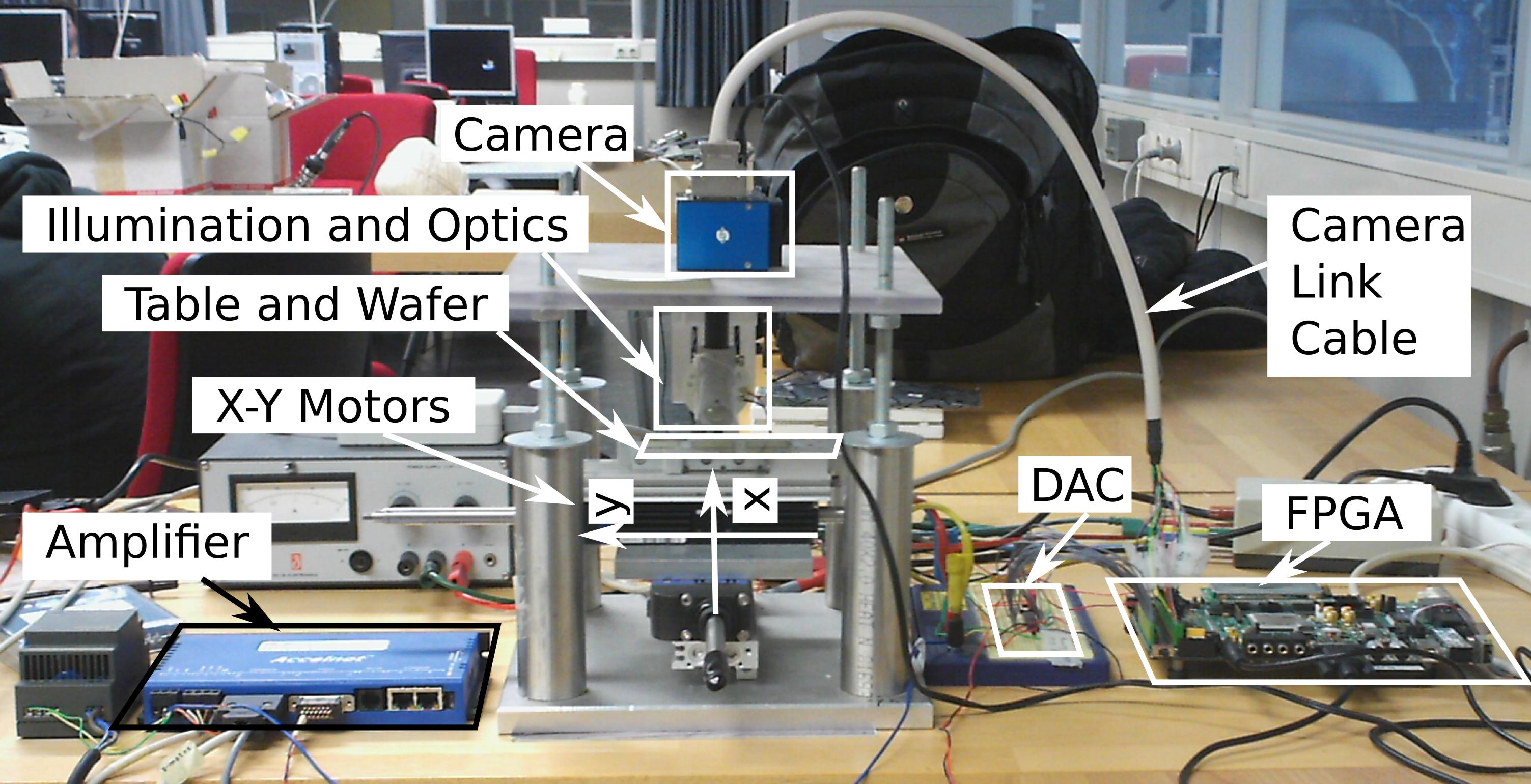

Figure 1: 1000 fps vision-in-the-loop system.

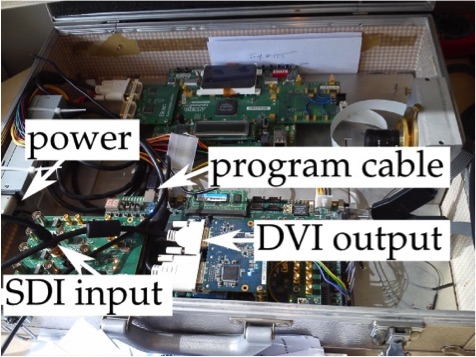

Figure 2: FPGA system running 1080p 2D-to-3D conversion at 30 fps.

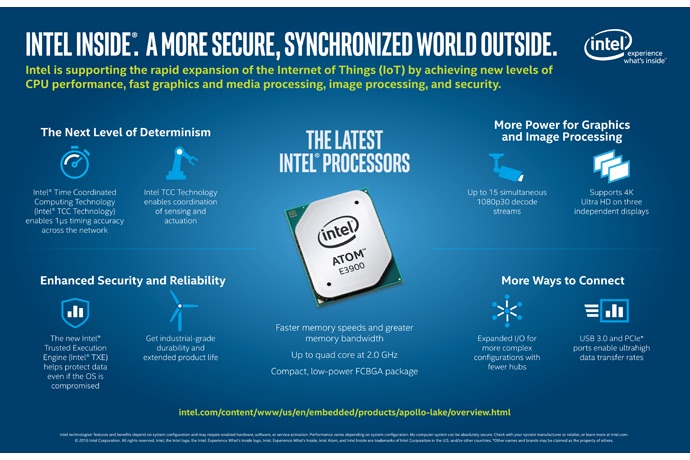

Figure 3: Image signal processor of Intel (top right part).

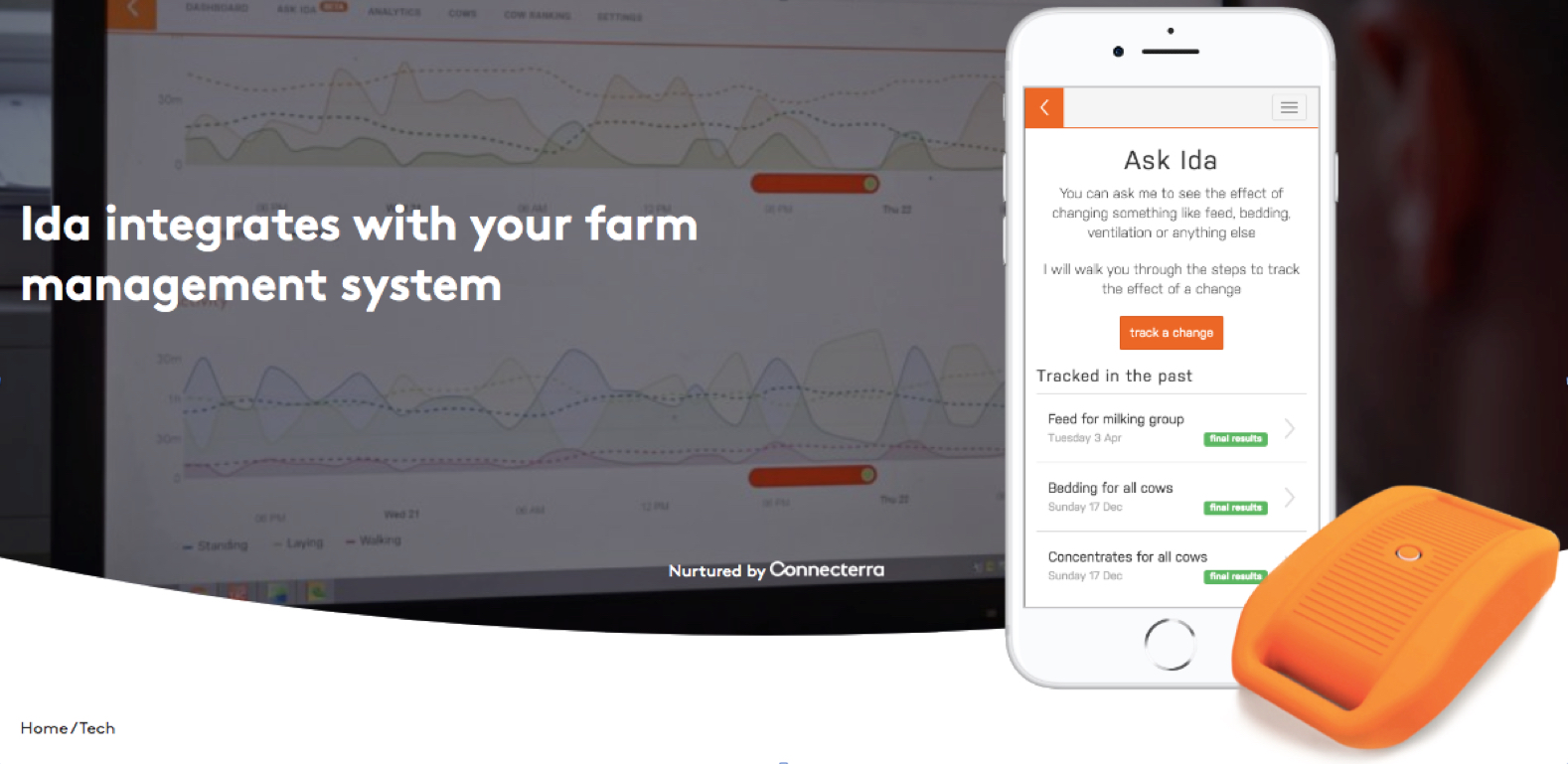

Figure 4: IDA sensor. More info on

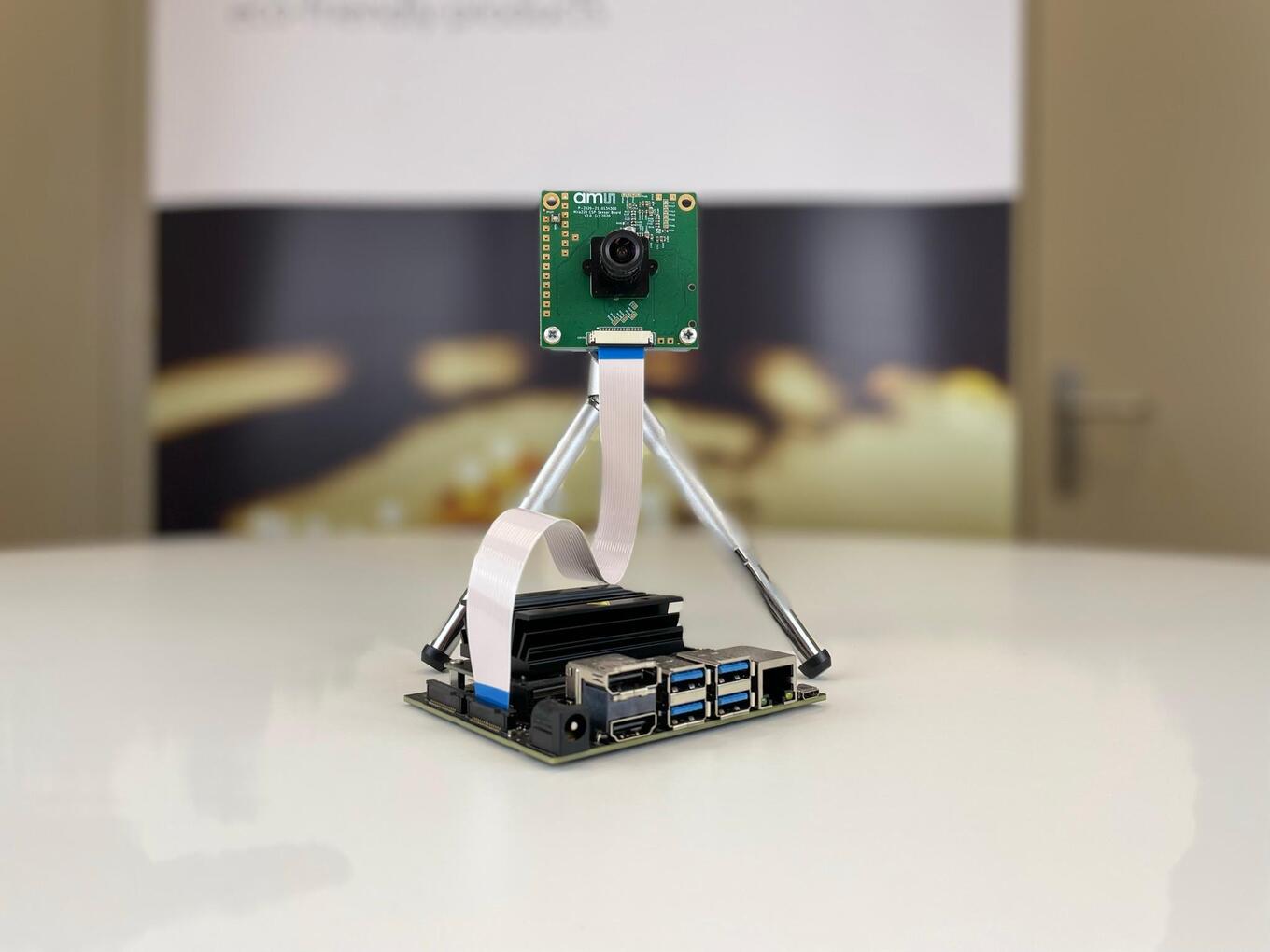

Figure 5: Caspar smart home device.

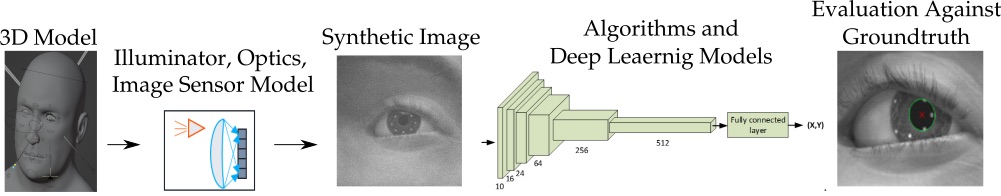

Figure 6: ams OSRAM image sensor connected to an evaluation kit.

Figure 7: End-to-end modeling framework for AR/VR applications.

Figure 8: Current generation of Bosch DICENTIS multimedia device in conference system.

Figure 9: Current generation of intelligent LED OSIRE E3731i for automotive ambient lighting.